AI Has a Hotness Problem

In the world of generated imagery, you’re either drop-dead gorgeous or a wrinkled, bug-eyed freak.

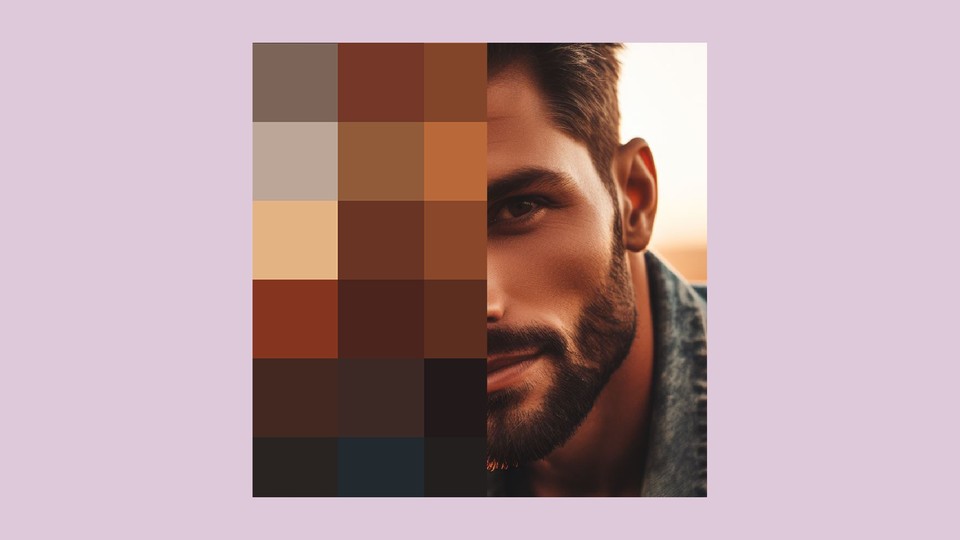

The man I am looking at is very hot. He’s got that angular hot-guy face, with hollow cheeks and a sharp jawline. His dark hair is tousled, his skin blurred and smooth. But I shouldn’t even bother describing him further, because this man is self-evidently hot, the kind of person you look at and immediately categorize as someone whose day-to-day life is defined by being abnormally good-looking.

This hot man, however, is not real. He is just a computer simulation, a photo created in response to my request for a close-up of a man by an algorithm that likely analyzed hundreds of millions of photos in order to conclude that this is what I want to see: a smizing, sculptural man in a denim jacket. Let’s call him Sal.

Sal was spun up by artificial intelligence. One day last week, from my home in Los Angeles (notably, the land of hot people), I opened up Bing Image Creator and commanded it to make me a man from scratch. I did not specify this man’s age or any of his physical characteristics. I asked only that he be rendered “looking directly at the camera at sunset,” and let the computer decide the rest. Bing presented me with four absolute smokeshows—four different versions of Sal, all dark-haired with elegant bone structure. They looked like casting options for a retail catalog.

Sal is an extreme example of a bigger phenomenon: When an AI image-generation tool—like the ones made by Midjourney, Stability AI, or Adobe—is prompted to create a picture of a person, that person is likely to be better-looking than those of us who actually walk the planet Earth. To be clear, not every AI creation is as hot as Sal. Since meeting him, I’ve reviewed more than 100 fake faces of generic men, women, and nonbinary people, made to order by six popular image-generating tools, and found different ages, hair colors, and races. One face was green-eyed and freckled; another had bright-red eye shadow and short bleached-blond hair. Some were bearded, others clean-shaven. The faces did tend to have one thing in common, though: Aside from skewing young, most were above-average hot, if not drop-dead gorgeous. None was downright ugly. So why do these state-of-the-art, text-to-image models love a good thirst trap?

After reaching out to computer scientists, a psychologist, and the companies that make these AI-generation tools, I arrived at three potential explanations for the phenomenon. First, the “hotness in, hotness out” theory: Products such as Midjourney are spitting out hotties, it suggests, because they were loaded up with hotties during training. AI image generators learn how to generate novel pictures by ingesting huge databases of existing ones, along with their descriptions. The exact makeup of that feedstock tends to be kept secret, Hany Farid, a professor at the UC Berkeley School of Information, told me, but the images they include are likely biased in favor of attractive faces. That would make their outputs prone to being attractive too.

The data sets could be stacked with hotties because they draw significantly from edited and airbrushed photos of celebrities, advertising models, and other professional hot people. (One popular research data set, called CelebA, comprises 200,000 annotated pictures of famous people’s faces.) Including normal-people pictures gleaned from photo-sharing sites such as Flickr might only make the hotness problem worse. Because we tend to post the best photos of ourselves—at times enhanced by apps that smooth out skin and whiten teeth—AIs could end up learning that even folks in candid shots are unnaturally attractive. “If we posted honest photos of ourselves online, well, then, I think the results would look really different,” Farid said.

For a good example of how existing photography on the internet could bias an AI model, here’s a nonhuman one: DALL-E seems inclined to make images of wristwatches where the hands point to 10:10, an aesthetically pleasing V configuration that is often used in watch advertisements. If the AI image generators are seeing lots of skin-care advertisements (or any other ads with faces), they could be getting trained to produce aesthetically pleasing cheekbones.

A second explanation of the problem has to do with how the AI faces are constructed. According to what I’ll call the “midpoint hottie” hypothesis, the image-generating tools end up generating more attractive faces as an accidental by-product of how they analyze the photos that go into them. “Averageness is more attractive in general than non-averageness,” Lisa DeBruine, a professor at the University of Glasgow School of Psychology and Neuroscience who studies the perception of faces, told me. Combining faces tends to make them more symmetrical and blemish free. “If you take a whole class of undergraduate psychology students and you average together all the women’s faces, that average is going to be pretty attractive,” she said. (This rule applies only to sets of faces of a single demographic, though: When DeBruine helped analyze the faces of visitors to a science museum in the U.K., for example, she found that the averaged one was an odd amalgamation of bearded men and small children.) AI image generators aren’t simply smushing faces together, Farid said, but they do tend to produce faces that look like averaged faces. Thus, even a generative-AI tool trained only on a set of normal faces might end up putting out unnaturally attractive ones.

Finally, we have the “hot by design” conjecture. It may be that a bias for attractiveness is built into the tools on purpose or gets inserted after the fact by regular users. Some AI models incorporate human feedback by noting which of their outputs are preferred. “We don’t know what all of these algorithms are doing, but they might be learning from the kind of ways that people interact with them,” DeBruine said. “Maybe people are happier with the face images of attractive people.” Alexandru Costin, the vice president for generative AI at Adobe, told me that the company tracks which images generated by its Firefly web application are getting downloaded, and then feeds that information back into the tool. This process has produced a drift toward hotness, which then has to be corrected. The company uses various strategies to “de-bias” the model, Costin said, so that it won’t only serve up images “where everybody looks Photoshopped.”

A representative for Microsoft’s Bing Image Creator, which I used to make Sal, told me that the software is powered by DALL-E and directed questions about the hotness problem to DALL-E’s creator, OpenAI. OpenAI directed questions back to Microsoft, though OpenAI did put out a document earlier this month acknowledging that its latest model “defaults to generating images of people that match stereotypical and conventional ideals of beauty,” which could end up “perpetuating unrealistic beauty benchmarks and fostering dissatisfaction and potential body image distress.” The makers of Stable Diffusion and Midjourney did not respond to requests for comment.

Farid stressed that very little is known about these models, which have been widely available to the public for less than a year. As a result, it’s hard to know whether AI’s pro-cutie slant is a feature or a bug, let alone what’s causing the hotness problem and who might be to blame. “I think the data explains it up to a point, and then I think it’s algorithmic after that,” he told me. “Is it intentional? Is it sort of an emergent property? I don’t know.”

Not all of the tools mentioned above produced equally hot people. When I used DALL-E, as accessed through OpenAI’s site, the outputs were more realistically not-hot than those produced by Bing Image Creator, which relies on a more advanced version of the same model. In fact, when I prompted Bing to make me an “ugly” person, it still leaned hot, offering two very attractive people whose faces happened to have dirt on them and one disturbing figure who resembled a killer clown. A few other image generators, when prompted to make “ugly” people, offered sets of wrinkly, monstrous, orc-looking faces with bugged-out eyes. Adobe’s Firefly tool returned a fresh set of stock-image-looking hotties.

Whatever the cause of AI hotness, the phenomenon itself could have ill effects. Magazines and celebrities have long been scolded for editing photos to push an ideal of beauty that is impossible to achieve in real life, and now AI image models may be succumbing to the same trend. “If all the images we’re seeing are of these hyper-attractive, really-high-cheekbones models that can’t even exist in real life, our brains are going to start saying, Oh, that’s a normal face,” DeBruine said. “And then we can start pushing it even more extreme.” When Sal, with his beautiful face, starts to come off like an average dude, that’s when we’ll know we have a problem.