The Real Lesson From The Making of the Atomic Bomb

A generation of AI researchers treat Richard Rhodes’s seminal book like a Bible as they develop technology with the potential to remake—or ruin—our world.

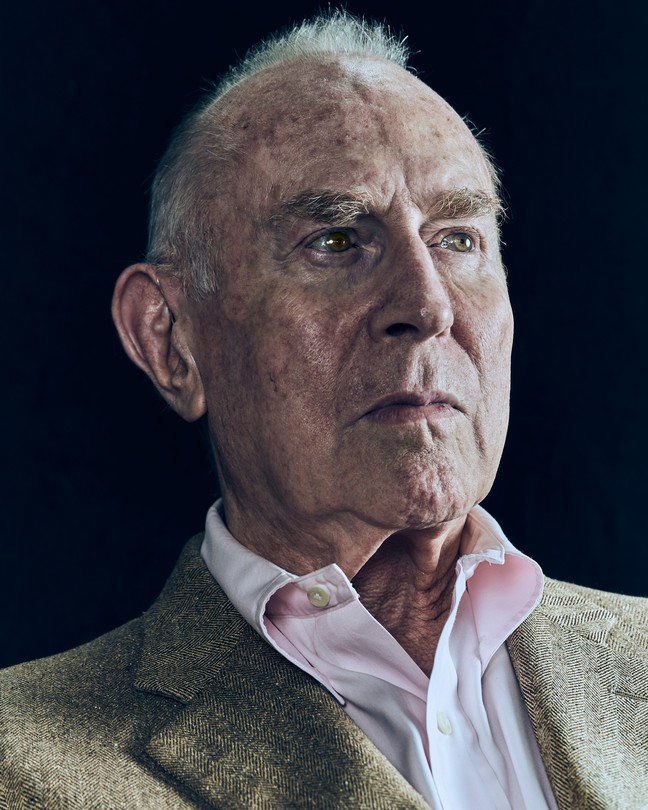

Doom lurks in every nook and cranny of Richard Rhodes’s home office. A framed photograph of three men in military fatigues hangs above his desk. They’re tightening straps on what first appear to be two water heaters but are, in fact, thermonuclear weapons. Resting against a nearby wall is a black-and-white print depicting the moments after the detonation of an atomic bomb: a thousand-foot-tall ghostly amoeba. And above us, dangling from the ceiling like the sword of Damocles, is a plastic model of the Hindenburg.

Depending on how you choose to look at it, Rhodes’s office is either a shrine to awe-inspiring technological progress or a harsh reminder of its power to incinerate us all in the blink of an eye. Today, it feels like the nexus of our cultural and technological universes. Rhodes is the 86-year-old author of The Making of the Atomic Bomb, a Pulitzer Prize–winning book that has become a kind of holy text for a certain type of AI researcher—namely, the type who believes their creations might have the power to kill us all. On Friday afternoon, he will take his seat in a West Seattle theater and, like many other moviegoers, watch Oppenheimer, Christopher Nolan’s summer blockbuster about the Manhattan Project. (The film is not based on his book, though he suspects his text served as a research aid; he’s excited to see it anyway.)

I first encountered The Making of the Atomic Bomb in March, when I spoke with an AI researcher who said he carts the doorstop-size book around every day. (It’s a reminder that his mandate is to push the bounds of technological progress, he explained—and a motivational tool to work 17-hour days.) Since then, I’ve heard the book mentioned on podcasts and cited in conversations I’ve had with people who fear that artificial intelligence will doom us all. “I know tons of people working on AI policy who’ve been reading Rhodes’s book for inspiration,” Vox’s Dylan Matthews wrote recently. A New York Times profile of the AI company Anthropic notes that Rhodes’s book is “popular among the company’s employees,” some of whom “compared themselves to modern-day Robert Oppenheimers.”

Like Oppenheimer before them, many merchants of AI believe their creations might change the course of history, and so they wrestle with profound moral concerns. Even as they build the technology, they worry about what will happen if AI becomes smarter than humans and goes rogue, a speculative possibility that has morphed into an unshakable neurosis as generative-AI models take in vast quantities of information and appear ever more capable. More than 40 years ago, Rhodes set out to write the definitive account of one of the most consequential achievements in human history. Today, it’s scrutinized like an instruction manual.

Rhodes isn’t a doomer himself, but he understands the parallels between the work at Los Alamos in the 1940s and what’s happening in Silicon Valley today. “Oppenheimer talked a lot about how the bomb was both the peril and the hope,” Rhodes told me—it could end the war while simultaneously threatening to end humanity. He has said that AI might be as transformative as nuclear energy, and has watched with interest as Silicon Valley’s biggest companies have engaged in a frenzied competition to build and deploy it.

AI boosters and builders would no doubt take comfort in an argument Rhodes once made, in the foreword to the 25th-anniversary edition of his book, that the discovery of nuclear fission, and thereby the bomb, was inevitable. “To stop it, you would have had to stop physics,” he writes. This argument echoes in the rhetoric of bullish AI companies and governments who see the technology as part of a global informational arms race. Democratic nations cannot pause or wait for laws to catch up, the logic goes, lest we lose out to China or some other hostile power.

That idea helps explain why a technologist would construct an AI system even as they believe it could extinguish human life—and so does the epigraph in the first section of The Making of the Atomic Bomb. Here Rhodes quotes Oppenheimer: “It is a profound and necessary truth that the deep things in science are not found because they are useful; they are found because it was possible to find them.”

As a technology writer, I have spent much of my career grappling with people who possess an impulse to build, consequences be damned. I’m fascinated and confounded by the mindset I’ve observed in AI founders and researchers who say they’re terrified of the very things they’re actively working to bring into existence. I’ve struggled to square this personality trait with my own inclinations: toward caution, toward a paralyzing obsession with matrices of unintended consequences. What is it, I asked Rhodes. What is the unifying quality that possesses people to open Pandora’s box? The question hung in the air, just below the dangling model of the Hindenburg, as I imagined Rhodes flipping through a set of interviews and dog-eared biographies in his head.

He began to explain. Any great scientist, “before their 12th year,” he said, has “some formative experience that pushed them in the direction they were going in, and made them decide they wanted to go through the grueling process of learning mathematics or science until they could push the boundaries.” Enrico Fermi, the inventor of the first nuclear reactor and a chief architect of the atomic bomb, lost a beloved brother as a teenager, and not long after that, he grew obsessed with measuring and quantifying all areas of his life. “He could tell you how many steps he’d walked down the street,” Rhodes said. “He appeared so much like someone who found in numbers the kind of certainty that he’d lost when he lost his brother.” As a 10-year-old, Leo Szilard had been so disturbed by a Hungarian epic about the sun dying out that he grew fixated on rockets as a way to save the planet, Rhodes said—a quest that, eventually, led him to discover the nuclear chain reaction.

“It’s no coincidence that so many of the people who ended up in the bomb program were Jews who had escaped from Nazi Germany,” he said. “They’d seen what was happening there, they were all around it, and they knew it was horrible and terrifying and had to be stopped.” Rhodes sees the shadows of his own childhood, which was marked by physical abuse and starvation at the hands of his stepmother, in his work too: “It’s not surprising that all my books are, in some way, about human violence and how you deal with it, seeing as I’m an expert in that department.”

Perhaps this is another lesson in duality: in the grand scheme of things, our nightmares and dreams are of a piece. If there is a concept Rhodes wants AI researchers and founders to take away from The Making of the Atomic Bomb, it is the notion of complementarity. This is an idea from quantum physics that, according to Rhodes, the Nobel Prize–winning Danish physicist Niels Bohr traveled to Los Alamos to impart to Oppenheimer during the darkest days of the Manhattan Project. In very basic terms, complementarity describes how objects have conflicting properties that cannot be observed at the same time. The world contains multitudes.

Bohr, according to Rhodes, developed an entire philosophical worldview from this observation. It boils down to the notion that a terrible weapon might simultaneously be a wonderful tool. “Bohr’s idea brought hope to Los Alamos,” Rhodes said. “He told the physicists who were concerned about this weapon of mass destruction that this thing is going to change this condition of war, and thereby change the whole structure of international politics. It could either end the war altogether or destroy the world. The former gave them hope.”

The grand lesson, as Rhodes sees it, is that you may build an apocalyptic weapon that turns out to be a flawed agent of precarious peace. But the opposite could also be true: A tool designed to perpetuate human flourishing might bring about catastrophe. And so for Rhodes, the true fear regarding AI is simply that we are on an undefined path, that we are moving too fast and creating systems that may work against their intended purposes: instruments of productivity that end up destroying jobs; synthetic media that ultimately blur the lines between human-made and machine-made, between fact and hallucination. “What is most disturbing about it is how little time society will have to absorb and adapt to it,” Rhodes said of AI’s ascent.

On our way out of his office, Rhodes paused to show me a jar the size of a film canister with what looked like some rocks in it. A faded typewritten label said Trinitite, the name for the residue scraped from the desert floor in New Mexico after the Trinity nuclear-bomb test in July 1945. The blast was so hot that it turned the sand to glass. “Pretty spooky, isn’t it?” Rhodes said with a smile. It’s clear to me now why he keeps these relics so close. They are the physical manifestation of Bohr’s philosophy and the through line of much of Rhodes’s work: complementarity as interior design. A reminder that the joy and the horror of both the natural world and the one we build for ourselves is the fact that very little behaves as we expect it to. Try as we may, we can’t observe it all simultaneously. It is a reminder of the excitement and terror inherent in the unsolvable mystery that is being alive.

When you buy a book using a link on this page, we receive a commission. Thank you for supporting The Atlantic.